Tech heads sign robo-pledge

Leaders of the tech world have signed a global pledge against autonomous weapons.

Prominent CEOs, engineers and scientists from the technology industry have signed a document saying they will “neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons”.

At the 2018 International Joint Conference on Artificial Intelligence (IJCAI), the pledge was signed by 150 companies and more than 2,400 individuals from 90 countries working in artificial intelligence (AI) and robotics.

Organisational signatories include Google DeepMind, University College London, the XPRIZE Foundation, ClearPath Robotics/OTTO Motors, the European Association for AI, and the Swedish AI Society. Individuals include head of research at Google.ai Jeff Dean, AI pioneers Stuart Russell, Yoshua Bengio, Anca Dragan and Toby Walsh, and British Labour MP Alex Sobel.

“We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons,” the document states.

“We ask that technology companies and organisations, as well as leaders, policymakers, and other individuals, join us in this pledge.”

MIT physics professor Max Tegmark, president of the Future of Life Institute, which organised the pledge, said AI leaders are implementing a policy that politicians have thus far failed to put into effect.

“AI has huge potential to help the world – if we stigmatise and prevent its abuse,” Dr Tegmark said.

“AI weapons that autonomously decide to kill people are as disgusting and destabilising as bioweapons, and should be dealt with in the same way.”

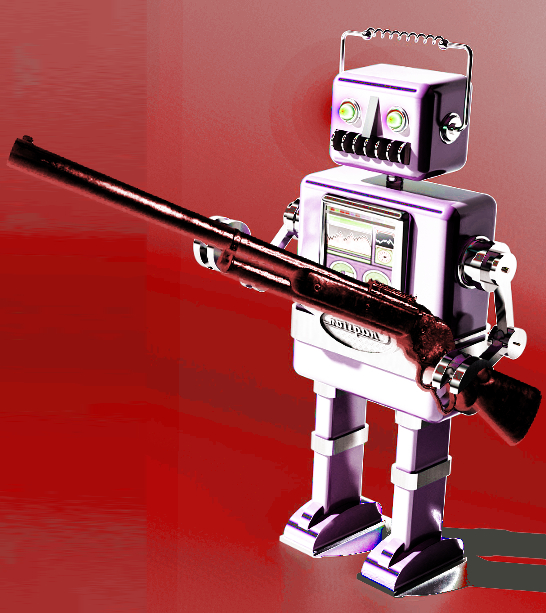

Lethal autonomous weapons systems (LAWS) – also dubbed ‘killer robots’ – are weapons that can identify, target, and kill a person, without a human involved.

That is, no person makes the final decision to authorise lethal force: the decision and authorisation about whether someone will die is left to the autonomous weapons system.

This does not include today’s drones, which are under human control; nor autonomous systems that merely defend against other weapons.

The pledge begins with the statement: “Artificial intelligence is poised to play an increasing role in military systems. There is an urgent opportunity and necessity for citizens, policymakers, and leaders to distinguish between acceptable and unacceptable uses of AI.”

A co-organiser of the pledge, professor of artificial intelligence at the University of New South Wales Toby Walsh, says there are thorny ethical issues surrounding LAWS.

“We cannot hand over the decision as to who lives and who dies to machines,” he said.

“They do not have the ethics to do so. I encourage you and your organizations to pledge to ensure that war does not become more terrible in this way.”

In addition to the troubling ethical questions surrounding lethal autonomous weapons, many advocates of an international ban on LAWS are concerned that they will be difficult to control – easier to hack, more likely to end up on the black market, and easier for terrorists and despots to obtain – which could become destabilising for all countries.

The next UN meeting on LAWS will be held in August 2018, and signatories hope the pledge will encourage lawmakers to commit to an international agreement.

Some potential negative effects are dramatised in the video below.
